Introduction

Apache Kafka, originally developed at LinkedIn and now maintained by the Apache Software Foundation, is a widely used platform for real-time data streaming. In this deep dive, we will explore the fundamentals of Kafka and its practical applications. To better understand Kafka, let's consider a practical example involving food delivery.

Real-Time Location Tracking in Food Delivery:

Imagine ordering food from a platform like Zomato, where you can track the real-time location of your assigned delivery partner. From an engineering perspective, this feature requires continuous updates of the delivery partner's location as they move from point A to point B.

The Engineering Behind Real-Time Location Tracking: To design a similar application or clone, we need to consider the following steps:

Obtaining the Current Location: First, we retrieve the delivery partner's current location from their phone with a "get" request. This location serves as the starting point (point A) for tracking their movement.

Continuous Location Updates: Every second, the delivery partner's location is captured and sent to Zomato's server. This ensures that the customer receives real-time updates on the delivery partner's movement.

Simultaneous Updates for Customers: Alongside sending the location updates to Zomato's server, the same information is also provided to the customers. This allows them to track the progress of their food delivery in real-time, providing a sense of satisfaction and anticipation.

The Role of Apache Kafka: Now, you might wonder how Apache Kafka fits into this scenario. Kafka acts as a distributed streaming platform that enables the real-time processing of data streams. It serves as a reliable and scalable backbone for handling the continuous flow of location updates in our food delivery example.

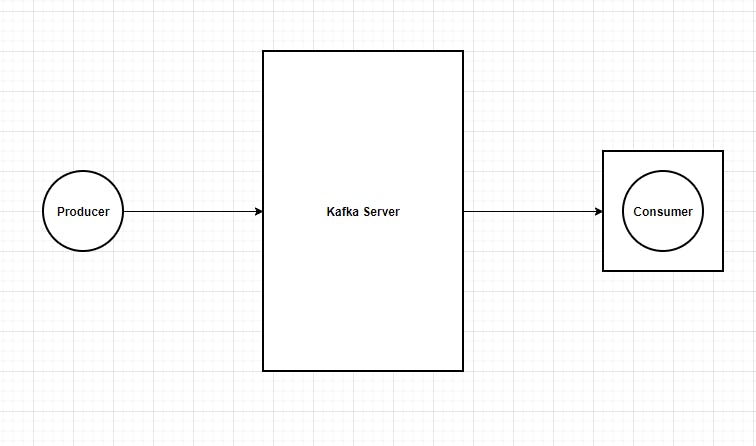

Kafka's Key Concepts: To fully grasp Kafka's functionality, let's briefly explore its key concepts:

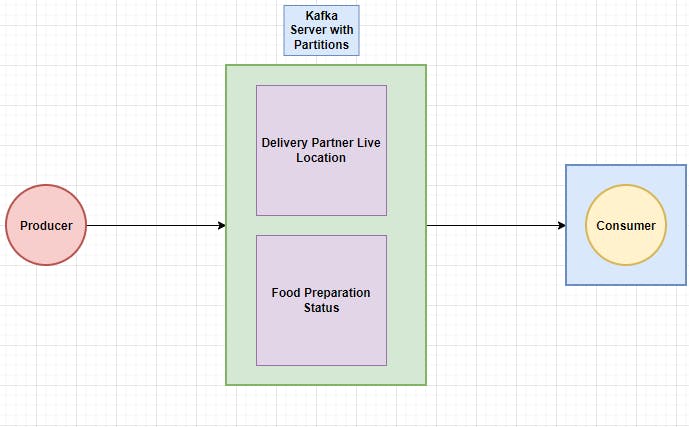

Topics: In Kafka, data is organized into topics, which represent specific streams of information. In our example, we can have a topic dedicated to tracking the delivery partner's location.

Producers: Producers are responsible for publishing data to Kafka topics. In our case, the delivery partner's phone acts as a producer, continuously sending location updates to the Kafka topic.

Consumers: Consumers subscribe to Kafka topics and retrieve data from them. In our example, Zomato's server and the customers' devices act as consumers, receiving real-time location updates.

Brokers: Kafka brokers serve as the intermediaries between producers and consumers. They handle the storage and replication of data across multiple servers, ensuring fault tolerance and scalability.
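
The four concepts above can be sketched with a toy in-memory model. This is only an illustration, not how Kafka is implemented or what its client API looks like: the `InMemoryBroker` class, its `produce` and `consume` methods, the topic name `partner-location`, and the consumer names are all hypothetical.

```python
from collections import defaultdict


class InMemoryBroker:
    """Toy stand-in for a Kafka broker: one append-only log per topic."""

    def __init__(self):
        self.logs = defaultdict(list)    # topic name -> list of messages
        self.offsets = defaultdict(int)  # (consumer, topic) -> next offset to read

    def produce(self, topic, message):
        """Append a message to the topic's log and return its offset."""
        self.logs[topic].append(message)
        return len(self.logs[topic]) - 1

    def consume(self, consumer, topic):
        """Return the messages this consumer has not read yet."""
        start = self.offsets[(consumer, topic)]
        messages = self.logs[topic][start:]
        self.offsets[(consumer, topic)] = len(self.logs[topic])
        return messages


broker = InMemoryBroker()

# The delivery partner's phone acts as the producer.
broker.produce("partner-location", {"partner_id": 7, "lat": 28.6139, "lon": 77.2090})
broker.produce("partner-location", {"partner_id": 7, "lat": 28.6141, "lon": 77.2093})

# The server and the customer's device read the same stream independently.
server_view = broker.consume("zomato-server", "partner-location")
customer_view = broker.consume("customer-app", "partner-location")
```

Both consumers receive the same two updates because each one tracks its own read position (offset) in the topic's log. Reading a message does not remove it from the topic.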

Real-Time Data Processing and High Throughput in Apache Kafka

In scenarios where real-time data processing and high throughput are crucial, Apache Kafka shines as a reliable solution. Let's explore a few examples to understand how Kafka handles such demanding use cases.

Food Delivery Application: In our previous example, we discussed how Kafka enables real-time location tracking in food delivery. With Kafka, the delivery partner's phone acts as a producer, continuously sending location updates to Kafka topics. Simultaneously, consumers, such as Zomato's server and customers' devices, receive these updates in real time. Kafka's ability to handle high throughput ensures smooth data flow, even with a large number of delivery partners and customers.

Chatting Application: Consider a popular chatting application like Discord, where thousands of users are engaged in real-time conversations. Every message needs to be inserted into the database and emitted to all other users. If each message had to be written to the database individually before being delivered, the database would become the bottleneck. With Kafka, messages are published to a topic first, so they can be delivered to participants with minimal delay while the database inserts are handled at high throughput. Kafka's distributed architecture allows for efficient handling of the high volume of messages generated by a large number of users.

Ride-Hailing Services: Ride-hailing platforms like Ola and Uber rely on real-time data processing and database operations to provide a seamless experience. Several services run simultaneously on the same driver data, each performing a specific task, such as calculating fares, monitoring driver behavior, or analyzing real-time speed, and storing its results in the database. Kafka's ability to handle high throughput ensures that these services can process data from a vast number of drivers in real time, enabling efficient operations.

Collaboration Tools: Real-time collaboration tools like Trello and Figma require simultaneous real-time updates and database operations. Users need to see changes made by others in real-time while ensuring data consistency and persistence. Kafka's distributed streaming platform allows for efficient synchronization of updates across multiple users and ensures that database operations can be performed seamlessly, even in high-throughput scenarios.

Kafka and Databases: Complementary Solutions for High Throughput and Storage

Kafka's High Throughput and Temporary Storage: One of Kafka's key strengths is its ability to handle high throughput. It can efficiently process and transmit large volumes of data in real time. However, Kafka's storage is usually treated as temporary: messages are written to disk and kept for a configurable retention period, after which they are deleted. Kafka also does not offer the indexes or ad hoc queries that a database provides. In practice, Kafka acts as a buffer or a streaming platform that facilitates the smooth flow of data between producers and consumers.

The Role of Databases: Databases, such as PostgreSQL and MongoDB, play a crucial role in storing and managing data for long-term use. They provide durable storage, indexing mechanisms (e.g., B-trees, B+ trees), and efficient query processing capabilities. Databases are designed to handle large volumes of data and enable fast retrieval of information through queries.

Complementary Nature of Kafka and Databases: Kafka and databases are not alternatives to each other but rather complementary solutions. They are often integrated and used together to leverage their respective strengths. Let's consider an example to illustrate this:

Example: Uber's Data Processing Pipeline: Imagine Uber, with a fleet of 100,000 cars, each reporting 10 parameters. If every car reported just once per second (an illustrative rate), that would already be 100,000 inserts per second, which could overwhelm a database like PostgreSQL if each reading were written individually. To address this, Kafka can be introduced as an intermediary layer.

In this scenario, the car data is produced by the cars themselves and sent to Kafka topics. Kafka acts as a high-throughput streaming platform, efficiently handling the continuous flow of data. Consumers, such as data processing services or analytics engines, can subscribe to these Kafka topics and process the data in real-time.

To ensure long-term storage and enable efficient querying, the processed data can then be bulk-inserted into the database. This bulk insert operation, which occurs at regular intervals (e.g., every few seconds), mitigates the throughput issues associated with individual inserts. Additionally, Kafka's real-time nature ensures minimal lag in data availability.
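
The bulk-insert step can be sketched as follows. This is a minimal illustration under stated assumptions: Python's built-in `sqlite3` stands in for PostgreSQL, a generator stands in for messages read from a Kafka topic, and the table `car_readings`, its columns, and `BATCH_SIZE` are hypothetical. The sketch flushes when the buffer reaches a size threshold; flushing on a timer (every few seconds) would follow the same pattern.

```python
import sqlite3


def flush_batch(conn, batch):
    """Write all buffered readings in a single transaction, then clear the buffer."""
    with conn:  # one commit for the whole batch
        conn.executemany(
            "INSERT INTO car_readings (car_id, speed_kmh) VALUES (?, ?)", batch
        )
    batch.clear()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE car_readings (car_id INTEGER, speed_kmh REAL)")

BATCH_SIZE = 500  # hypothetical threshold
batch = []
flushes = 0

# Stand-in for messages consumed from a Kafka topic.
stream = ((car_id, 40.0 + car_id % 30) for car_id in range(1200))

for reading in stream:
    batch.append(reading)
    if len(batch) >= BATCH_SIZE:
        flush_batch(conn, batch)
        flushes += 1

if batch:  # write whatever is left at the end
    flush_batch(conn, batch)
    flushes += 1

row_count = conn.execute("SELECT COUNT(*) FROM car_readings").fetchone()[0]
```

Here 1,200 readings reach the database in three write transactions instead of 1,200 individual ones, which is the saving the bulk insert is meant to provide.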

Self/Auto-Balancing Mechanism

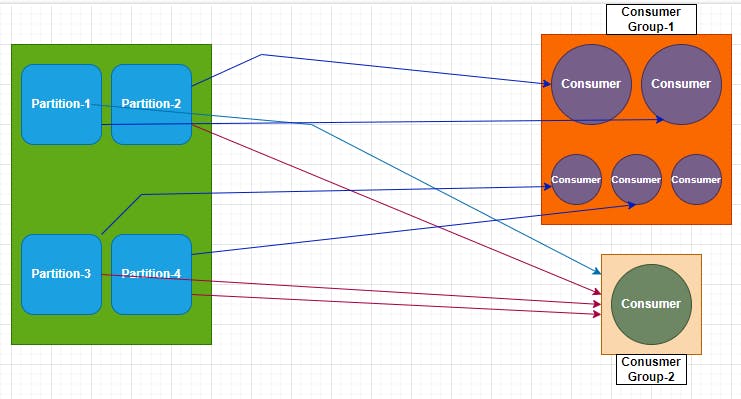

Consumer groups are an essential concept in Apache Kafka that allows multiple consumers to work together to consume messages from one or more topics. By using consumer groups, Kafka ensures that each message in a topic is consumed by only one consumer within a group, enabling parallel processing and load balancing.

Consumers are organized into consumer groups, and each group can have one or more consumers. A topic is divided into partitions, and when a message is produced for a topic, Kafka assigns it to a specific partition. Within a consumer group, each partition is consumed by only one consumer. This lets multiple consumers in the same group process messages in parallel without duplicating each other's work.
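
For messages that carry a key, Kafka's default partitioner picks the partition by hashing the key, so all messages with the same key land in the same partition. A minimal sketch of that idea, assuming a topic with four partitions: `partition_for` and the key `partner-7` are hypothetical, and CRC32 is used here only as a stand-in for the hash function Kafka actually uses.

```python
import zlib

NUM_PARTITIONS = 4  # assumed partition count for the topic


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a message key to a partition index (CRC32 stands in for Kafka's hash)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# Two updates from the same delivery partner map to the same partition.
p1 = partition_for("partner-7")
p2 = partition_for("partner-7")
```

Keeping one partner's updates in a single partition matters because Kafka preserves message order within a partition, not across partitions.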

Let's consider an example to understand how consumer groups work. Suppose we have a Kafka topic with four partitions and two consumer groups: Group 1 with five consumers and Group 2 with one consumer. When the consumers start consuming messages, Kafka's auto-balancing feature ensures that each partition is assigned to only one consumer within Group 1. This means that four of the five consumers in Group 1 will each be responsible for one partition.

The fifth consumer in Group 1 stays idle, because a group cannot have more active consumers than there are partitions. Group 2 is balanced independently of Group 1: every group receives all messages in the topic, so the single consumer in Group 2 is assigned all four partitions.

Consumer groups provide several benefits. Firstly, they enable parallel processing of messages, as each consumer within a group can work independently on its assigned partition. This allows for efficient and scalable message processing. Secondly, consumer groups ensure fault tolerance. If a consumer within a group fails, Kafka automatically reassigns its partitions to other active consumers within the same group, ensuring uninterrupted message consumption.
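
The balancing and failover behavior can be sketched with a simple round-robin assignment. This is an illustration only: `assign_partitions` and the consumer names are hypothetical, and Kafka's real partition assignors differ in detail. The sketch uses a topic with four partitions, one group of five consumers, and a second group with a single consumer.

```python
def assign_partitions(partitions, consumers):
    """Spread partitions over a group's consumers, round-robin.

    Returns a dict mapping every consumer to its list of partitions;
    a consumer with an empty list is idle.
    """
    assignment = {consumer: [] for consumer in consumers}
    if not consumers:
        return assignment
    for i, partition in enumerate(partitions):
        assignment[consumers[i % len(consumers)]].append(partition)
    return assignment


partitions = [0, 1, 2, 3]
group_1 = ["c1", "c2", "c3", "c4", "c5"]
group_2 = ["d1"]

# Each group is balanced independently over the same four partitions.
g1 = assign_partitions(partitions, group_1)
g2 = assign_partitions(partitions, group_2)

# Simulate c2 failing: the group rebalances over the remaining consumers.
g1_after_failure = assign_partitions(partitions, [c for c in group_1 if c != "c2"])
```

In `g1`, consumer `c5` ends up with no partitions, while in `g2` the lone consumer `d1` owns all four. After `c2` fails, every partition is still owned by exactly one of the remaining consumers, and the previously idle `c5` picks up work.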

In summary, consumer groups in Kafka allow multiple consumers to work together to consume messages from one or more topics. They enable parallel processing, load balancing, and fault tolerance, making them a crucial feature for building scalable and reliable data processing systems.

Best Practices While Working with Kafka

When using Kafka, there are several best practices to keep in mind to ensure optimal performance and reliability. Here are some key considerations:

Plan your topic and partition design carefully based on your use case and expected data volume.

Batch and compress messages to improve throughput and reduce network overhead.

Use asynchronous message production to maximize throughput and minimize latency.

Utilize consumer groups to scale horizontally and distribute the load across multiple consumers.

Monitor consumer lag to ensure timely processing and avoid falling behind.

Set up monitoring and alerting for key Kafka metrics such as throughput, latency, and consumer lag.

Enable encryption for data in transit using SSL/TLS to protect sensitive information.

Implement authentication and authorization mechanisms to control access to Kafka clusters.

Estimate the expected data volume and throughput to determine the required hardware resources.

Configure replication and regularly back up Kafka data and metadata for disaster recovery.
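
The benefit of batching before compressing can be seen without a Kafka cluster. The sketch below uses Python's `gzip` module on made-up location messages (the field names and values are hypothetical) and compares compressing each message on its own with compressing one batch. In a real deployment this is controlled through producer settings such as `compression.type`, `batch.size`, and `linger.ms` rather than hand-written code.

```python
import gzip
import json

# 200 made-up location updates, serialized as JSON.
messages = [
    json.dumps({"partner_id": 7, "lat": 28.6139 + i * 1e-4, "lon": 77.2090}).encode("utf-8")
    for i in range(200)
]

# Compressing each message separately: little redundancy to exploit,
# and every message pays the gzip header overhead.
individually = sum(len(gzip.compress(m)) for m in messages)

# Compressing one newline-delimited batch: repeated field names compress well.
batched = len(gzip.compress(b"\n".join(messages)))

raw = sum(len(m) for m in messages)
```

Comparing `raw`, `individually`, and `batched` shows why compression pays off mainly when messages are grouped: small messages compressed one at a time gain little, while a batch shares its repeated structure.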

Remember to adapt these practices to your specific use case and consult the official Kafka documentation for more detailed information.